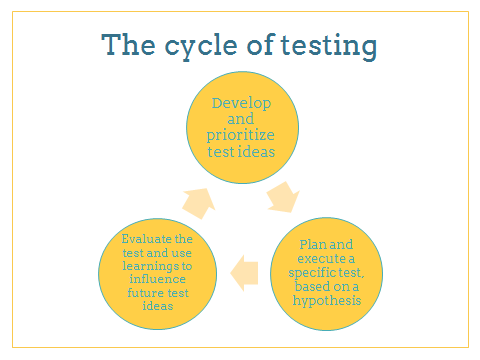

Testing is the most powerful tool we have to build a data-driven program. It’s what takes us from guesstimation to fact, from “this is how we’ve always done it” to “this is how we should be doing it.” But only if it’s done right.

A bad test isn’t just a waste of resources—if it leads you down the wrong path it’s worse than no test at all.

So! We’ve put together a quick and easy testing guide to help you make sure you’re getting the most out of your experimentation.

Step 1: Define Your Goals

This could be as simple as saying you want to generate a higher response rate to your emails, or it could be as complicated as growing your sustainer program over the next year from various channels. Every time you have a test idea, you should ask yourself: how does this support my long-term priorities?

Step 2: Research

How is your program doing already? If you don’t know what’s broken, you won’t know what to fix.

Study up on your own program by collecting direct data, including looking at email or website performance via analytics, installing heat mapping software on key pages, and running usability testing.

You can also learn a lot about your own program by snooping around your competitors’ websites. Really take a close look at the site’s user flow. Does the donation form design facilitate an easy transition from learning about the organization to donating? Do you feel compelled to take action? Is it easy to learn about the organization’s mission, and what makes that easy (or difficult)? Is it easy to sign up for email? Pay attention to what works and what really doesn’t.

Reading blogs or articles about trends in the industry (like this one!), attending webinars, and generally being aware of the internet in 2017 all count as research! Just by experiencing the internet (shopping on Amazon, using Facebook or Instagram, and donating to causes you care about), you become part of a larger community of internet users. Pay attention to annoying quirks on those sites, and don’t replicate them in your own sites and emails!

Finally, there’s nothing like another pair of eyes to help you see things you’ve missed. Enlist a coworker to glance at your page or email for 10 seconds (seriously—no more than that!) and ask them what it was all about. Then let them complete whatever task you want constituents to do—whether that’s donating, taking action, sharing, or navigating the site. Take notes on errors, challenges, and things they don’t like about the experience. All of those points of friction are great starting places for test ideas!

Step 3: Develop Some Test Ideas

Examine your direct and indirect data to find places where you might be under-performing (it helps to compare your rates to industry Benchmarks) and make a list of where you could potentially run some tests. These are typically in email messages, on high-traffic portions of your website, and on your donation, action, and sign-up pages.

Now, using what you learned in the research phase, develop some ideas for how you could improve those areas. Remember that every idea should support one of your goals.

Step 4: Refine and Prioritize

It’s true, we can’t test every single little thing. We just don’t have the time or the money. This is where we employ the power of the hypothesis to help us suss out whether or not a test is going to have enough impact to be worthwhile.

The purpose of a hypothesis is to make sure we’re thinking critically about what we want to test and why, and to make sure we learn from the test.

To help you create a hypothesis for your idea, fill out this formula:

Changing [control variable] to [test variation] will improve [test objective] because [reason you think the change will help].

For example, say we were testing renewal language for prospects. Our thinking is that we could get our prospects to convert to donors by making them feel like they had already committed to the organization. Therefore, the hypothesis would look like this:

Changing the traditional copy to include renewal language will boost response rate because donors will feel compelled to maintain their support (even if they haven’t contributed previously).

If you craft a good hypothesis, you will learn something from every test, regardless of the outcome.

One final note: Try to focus in on a few big ideas that could have a major impact on the user’s experience, but try not to change too many variables at one time. That will make it hard to determine which specific change had the impact.

Step 5: Plan Your Test (and Let It Fly!)

Take a look at your existing audience size (either your email file size or your website traffic) and determine how long you’ll need to run the test. It can be a little intimidating at first, but it’s well worth getting familiar with calculating your minimum detectable effect, so you know you have a shot at getting statistically significant results.
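To make that less intimidating, here is a minimal sketch of a sample size calculation for a rate-based test (like response rate). It uses a standard formula for comparing two proportions. The function name, the 5% significance level, and the 80% power default are our assumptions for illustration, not settings from any particular tool.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate people needed in EACH group to detect a given lift.

    baseline_rate: current rate, e.g. 0.10 for a 10% response rate
    relative_lift: smallest lift worth detecting, e.g. 0.5 for +50%
    alpha, power:  assumed defaults (two-sided 5% test, 80% power)
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)

    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical scenario: 10% baseline rate, hoping to detect a 50% lift.
needed = sample_size_per_group(0.10, 0.5)
print(f"About {needed} people per group")
```

The smaller the lift you want to detect, the more people you need. If the number is bigger than your file, that is a sign to test a bolder change or run the test longer.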

Once you have your audience identified, one of the most important parts of setting up a test is making sure your audience is properly randomized, whether by splitting your list into static testing groups or by running your experiment through third-party software such as Optimizely or Google Optimize.

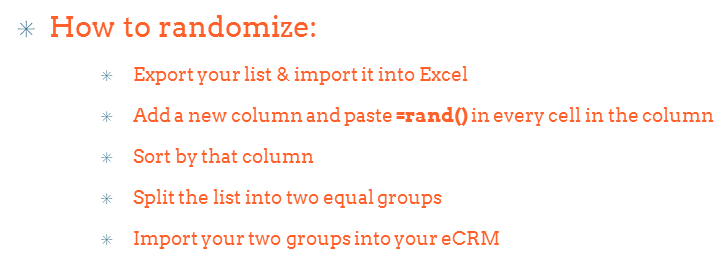

How to randomize an email list (if you don’t have a built-in A/B testing tool):
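One way to do the split by hand is to shuffle the list and deal it out like a deck of cards. This is a minimal sketch rather than a prescribed method; the function name, the example addresses, and the seed value are all made up for illustration.

```python
import random


def split_into_groups(emails, n_groups=2, seed=2017):
    """Shuffle a list of addresses and deal them into n_groups random groups.

    A fixed seed (an arbitrary choice here) makes the split reproducible,
    so the same list always produces the same static groups.
    """
    shuffled = list(emails)  # copy so the original list is left untouched
    random.Random(seed).shuffle(shuffled)
    # Deal out round-robin: group 0 gets items 0, n, 2n...; group 1 gets 1, n+1...
    return [shuffled[i::n_groups] for i in range(n_groups)]


# Hypothetical list of ten supporters.
supporters = [f"supporter{i}@example.org" for i in range(10)]
group_a, group_b = split_into_groups(supporters)
print(len(group_a), len(group_b))
```

Shuffling first matters: splitting an unshuffled list in half can sort people by sign-up date or last name without you noticing, which muddies the comparison.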

Once you’ve set up your test (and run it through a good amount of QA!), launch it into the world!

Helpful reminders:

- If you plan to look at test results by segment, or track specific behavior (like button clicks), make sure that data is sorted ahead of time.

- Although we often say “test” and “control” when we’re talking about a test amongst ourselves, the distinctions are arbitrary; it can be helpful to name your groups with what you are testing (e.g. “$5 ask group” and “$10 ask group”).

- Don’t stop the test too early or check results too frequently. The more often you check, the greater the likelihood that you’ll get a false positive (aka fake results).
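To see why frequent checking is risky, here is a small simulation sketch. It runs many pretend tests where both groups are truly identical, so every "significant" result is a false positive. All of the numbers (500 tests, 2,000 people per group, a peek every 200 people, a 10% rate) are assumptions chosen for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist


def is_significant(conv_a, conv_b, n, alpha=0.05):
    """Two-sided z-test for two proportions with n people in each group."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = sqrt(2 * pooled * (1 - pooled) / n)
    if se == 0:
        return False  # identical all-or-nothing results: nothing to compare
    z = abs(conv_a - conv_b) / n / se
    p_value = 2 * (1 - NormalDist().cdf(z))
    return p_value < alpha


def simulate(n_tests=500, n_per_group=2000, peek_every=200, rate=0.10, seed=1):
    """Return (false positive rate when peeking, rate when checking once)."""
    rng = random.Random(seed)
    peeking_hits = 0
    patient_hits = 0
    for _ in range(n_tests):
        conv_a = conv_b = 0
        flagged_at_a_peek = False
        for i in range(1, n_per_group + 1):
            # Both groups convert at the SAME rate: there is no real winner.
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if i % peek_every == 0 and is_significant(conv_a, conv_b, i):
                flagged_at_a_peek = True
        peeking_hits += flagged_at_a_peek
        patient_hits += is_significant(conv_a, conv_b, n_per_group)
    return peeking_hits / n_tests, patient_hits / n_tests


peeking_rate, patient_rate = simulate()
print(f"Declared a winner at some peek: {peeking_rate:.0%}")
print(f"Declared a winner at the end only: {patient_rate:.0%}")
```

Checking only once at the end should land near the 5% false positive rate you signed up for, while calling a winner at the first significant peek should push that rate noticeably higher.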

Step 6: Analyze Your Results

This is the best part! (says the data analyst)

We typically use a calculation called “revenue per visitor” or “revenue per recipient” to determine if our fundraising test was successful at raising more money overall. This helps us assign value to everyone who was exposed to the test, regardless of whether or not they converted, to tell us which was really more successful in generating more revenue.
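As a quick sketch of the arithmetic, revenue per recipient is just total revenue divided by everyone who got the message, donors and non-donors alike. The gift amounts and audience sizes below are invented for illustration.

```python
def revenue_per_recipient(gifts, recipients):
    """Total revenue divided by everyone exposed to the test version."""
    if recipients <= 0:
        raise ValueError("recipients must be positive")
    return sum(gifts) / recipients


# Hypothetical results: group A got four gifts, group B got only one.
group_a = revenue_per_recipient(gifts=[25, 50, 10, 100], recipients=5000)
group_b = revenue_per_recipient(gifts=[250], recipients=5000)

print(f"Group A: ${group_a:.3f} per recipient")
print(f"Group B: ${group_b:.3f} per recipient")
```

In this made-up example, group A wins on response rate but group B raises more per recipient, which is exactly the kind of difference a rate alone would hide.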

However, if you’re only looking at a rate like clickthrough rate or sign-up rate, you can use a chi-squared test. And then, for average gift, there’s the two-tailed t-test.
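For the rate comparison, here is a minimal sketch of a chi-squared test on a two-group result, written with only the Python standard library. It assumes exactly two groups and applies no continuity correction, and the function name and example counts are ours. It covers the rate case only; a t-test for average gift is easier to run in a stats package.

```python
from math import erfc, sqrt


def chi_squared_2x2(conv_a, total_a, conv_b, total_b):
    """Chi-squared test (1 degree of freedom, no continuity correction).

    Compares converted vs. not converted across two groups.
    Returns (chi-squared statistic, p-value).
    """
    observed = [
        [conv_a, total_a - conv_a],
        [conv_b, total_b - conv_b],
    ]
    row_totals = [total_a, total_b]
    col_totals = [conv_a + conv_b, (total_a - conv_a) + (total_b - conv_b)]
    grand_total = total_a + total_b

    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / grand_total
            chi2 += (observed[i][j] - expected) ** 2 / expected

    # With 1 degree of freedom, the upper tail reduces to erfc(sqrt(x / 2)).
    p_value = erfc(sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical sign-up test: 150 of 1,000 vs. 100 of 1,000.
chi2, p_value = chi_squared_2x2(150, 1000, 100, 1000)
print(f"chi-squared = {chi2:.2f}, p = {p_value:.4f}")
```

A p-value below your chosen threshold (commonly 0.05) is what people usually mean by a significant result.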

If your results are significant, great! You have a winner, and can decide how you want to roll it out. If they’re not… womp womp. It’s possible that:

- You don’t have enough data. Maybe the impact of your changed variable isn’t as big as you thought and, given your audience size, you couldn’t detect the difference.

- Or there’s actually no difference! Don’t forget, if you were testing a basic message vs. a labor-intensive or costly implementation, it might be good news that there is no difference.

What if something that always works doesn’t work this time?

There’s a third possibility: Random chance. Maybe it works 95 times out of 100, but this is one of those remaining 5 when it didn’t work. Sucks, but that’s statistics!

If your test did not yield significant results but you need to make a decision about what to use moving forward, be up front about what you’re doing (and ideally decide on the approach before you run the test).

Never ever ever ever say: Results were not statistically significant BUT Version B raised more money. Most people will just hear “Version B raised more money”… when really, there was no detectable difference between the results.

Happy testing! We won’t even quiz you on what you’ve learned!